ThinkPad X1 Extreme Generation 4 Review

Major changes in the high-power model of the X1 line

Overview

This review is an analysis of the fourth generation X1 Extreme, a high-power laptop in the X1 line of computers. The X1 designation in ThinkPads is reserved for computers that combine excellent performance, style and build quality. The initial X1 offerings were simply called X1 and X1 Hybrid, and were introduced in about 2012. The following year, the model’s name was changed to X1 Carbon, due to its carbon fiber case. About 3 years later, touchscreen models were split off and named the X1 Yoga, which included a 360° hinge. All those models used low power (15 watt), mobile CPUs. In 2018, the X1 Extreme was introduced at approximately the same time as the compact mobile workstation model P1. Both these computers had H-series 8th generation, 45-watt Core i7 CPUs and a 15.6” screen. The major difference between the P1 and X1 Extreme was the graphics chip. The P1 used NVIDIA® Quadro graphics processors (GPUs) and the X1 Extreme used GeForce chips. This is not my area of expertise, but my understanding is that the P1 was a better choice for applications like AutoCAD, while the X1 Extreme’s target audience was heavy users of graphic design, photography, multi-media and other applications where frame rate was a serious consideration. An internet search will provide various comparisons that can give you a better explanation than I can provide. The following two years brought 9th and then 10th generation CPUs which were used in the X1 Extreme generations two and three. There was also an optional i9 processor added at that time, as well as screen resolutions of up to 3840X2160, including an OLED touch option.

The fourth generation of the X1 Extreme has brought major changes. The 11th generation Intel CPUs brought significant performance improvements in all low-power CPUs, so I was curious whether the same improvements would apply to the H-series, higher-power mobile CPUs in the X1 Extreme. There are three 45-watt processors available on the X1 Extreme: an i7-11850H (vPro), an i7-11800H (non-vPro), and an i9-11950H (vPro). There are five graphics options. The model can be configured without a discrete GPU, relying instead on the graphics integrated into the CPU (Intel® UHD Graphics for 11th Gen Intel® Processors). There are also four discrete GPU options, all in the high-performance NVIDIA GeForce RTX 30 series: the RTX 3050 Ti, 3060, 3070 and 3080. Modern laptops’ CPUs and GPUs are soldered to the motherboards, as opposed to older machines where these chips could be separate parts. Soldered components allow machines to be thinner and lighter. The downside of soldered components is the large number of possible combinations, each needing a different motherboard. Three CPUs and five graphics options would allow 15 combinations. If you have three Windows license options in the BIOS (Pro, Home, none), 15 becomes 45. Sometimes, a new processor becomes available from Intel and it gets added as an option. In this case, that would necessitate 15 new motherboards (five graphics options times three license options). Stocking parts for assembly becomes a very difficult problem, and management of replacement parts becomes impossible. Therefore, the available combinations will be limited.
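The motherboard arithmetic above can be checked with a short Python sketch. It simply enumerates every CPU, graphics and license combination; the list labels and the "hypothetical new CPU" entry are my own placeholders for illustration, not Lenovo part names.

```python
from itertools import product

# Option lists taken from the paragraph above; labels are informal.
CPUS = ["i7-11800H", "i7-11850H", "i9-11950H"]
GPUS = ["integrated UHD", "RTX 3050 Ti", "RTX 3060", "RTX 3070", "RTX 3080"]
LICENSES = ["Pro", "Home", "none"]


def board_variants(*option_lists):
    """Return every combination of one choice from each option list."""
    return list(product(*option_lists))


base = board_variants(CPUS, GPUS)
with_license = board_variants(CPUS, GPUS, LICENSES)
# Placeholder entry to model a processor added later (hypothetical).
with_new_cpu = board_variants(CPUS + ["hypothetical new CPU"], GPUS, LICENSES)

print(len(base))                              # CPU x GPU combinations
print(len(with_license))                      # ... x license options
print(len(with_new_cpu) - len(with_license))  # boards added by one new CPU
```

Each added option multiplies the total rather than adding to it, which is why the number of possible boards grows so quickly.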

Besides the upgraded CPU and graphics processor, the aspect ratio of the screen has changed from 16:9 to a taller 16:10. At the end of this review, I will include a short section that I wrote to explain how screen aspect ratio affects usage. Much of the material is copied from another review I wrote earlier this year for a different machine. I think it is worth repeating, but it can certainly be skipped if you wish. The optional high-resolution touch screen has the same number of pixel columns as the high-resolution generation 3 machine, 3840, but the taller screen has 2400 rows, rather than 2160. The screen size has increased from 15.6” to 16”. My research could find no screen manufacturers making 16” high-resolution OLED screens. Therefore, this year, the optional touch screen is described as UHD+ IPS Touch 3840×2400, 600nit, HDR400, Dolby Vision, 60Hz, Low Blue Light, 8+2-bit color. This IPS LCD screen replaces the OLED screen previously offered. A non-touch UHD+ screen is also available, as well as a QHD+ (2560X1600) non-touch panel. There was also a large improvement in the graphics chip offering. The generation three machine used an NVIDIA GeForce GTX 1650. This year, the four discrete options, all in the more-advanced RTX line, should provide a much better user experience. I also expect that they will work well with CAD and image manipulation software. As always, if your work or daily activities rely on unusual or specialized graphics compatibility or support, it is always best to confirm GPU compatibility with your software’s publisher.

I find it confusing that NVIDIA uses names for its laptop graphics chips that are the same as those used for desktop graphics cards. Using the GeForce RTX 3080 as an example, an RTX 3080 desktop graphics card draws 320 watts by itself. The largest power supply that is used with an X1 Extreme is 230 watts. The X1 Extreme’s CPU uses 45 watts by itself. There are lots of other parts that use electricity, so it is obvious that there will not be 320 watts available for the GPU. To complicate the situation, NVIDIA allows the computer maker to configure laptop GPUs to draw different amounts of power. The relationship between power usage and performance is not linear, so a 100-watt GPU cannot do twice the work of a 50-watt GPU, but there is a connection.

Lenovo has generously supplied me with a very well-configured X1 Extreme for this review. The machine I tested is based on an Intel i9-11950H, 11th generation, H-series, eight-core, sixteen-thread, 2.6 GHz CPU. This CPU has a maximum Turbo frequency of 5 GHz and has a 24MB cache. Intel refers to the 11th generation H-series CPU by the codename Tiger Lake. Tiger Lake CPUs are built on a ten-nanometer (10 nm) process. Earlier generation CPUs were built on a 14 nm process. The numbers are called lithography figures and tell you how close each transistor is to its neighbors. A lower number means that more transistors can be packed into a given size.

My test machine has the NVIDIA GeForce RTX 3080 GPU with 16GB of video memory. It also came with 32 GB of DDR4 SODIMM memory (2X16GB) and a UHD+ (3840×2400) IPS 600nit, HDR400, Dolby Vision, 60Hz, Low Blue Light, 8+2-bit color multitouch screen. There are two USB 3.1 Gen1 ports and two ports that support USB-C and Thunderbolt™ 4 connections. One of the USB 3.1 ports is always on for charging a phone or another device. The computer has a full-sized SD card slot, a slot for a security cable, an audio combo jack and a full-sized HDMI2.0/2.1 port. Networking is handled by an Intel Wireless-AX-210 adapter that combines WiFi 2 x 2 AX and Bluetooth® 5.2. Windows 10 Professional was preloaded. For storage, the computer has a fast, 2 terabyte Samsung MZVL22T0HBLB-00BL7 Performance SSD. This SSD uses a generation-4 PCIe interface, which increases the data transfer rate to be double that of PCIe generation 3 drives. My X1 Extreme test machine has a hybrid camera that combines an FHD webcam and an IR camera for facial recognition. The camera has a mechanical shutter for privacy. Now that video conferences are a part of everyday life, the FHD camera should provide a much better image and, thus, a better user experience.

Specifications

The X1 Extreme I tested weighs 2110 gm or 74.4 oz. The weights do not include the charger. There are slight weight variations depending on configuration. I would expect touch-screen models would be slightly heavier than non-touch models, since they have a digitizer layer added to the screen. Also, the cooling systems for models with RTX 3060, 3070 and 3080 GPUs come with a vapor chamber to handle the heat generated; the vapor chamber also adds a little weight. The X1 Extreme’s RTX 3080 can draw 80 watts of power. Other discrete GPU options use between 35 and 80 watts. Models having the RTX 3070 or RTX 3080 GPU need a large, 230-watt charger, weighing 978 gm or 30.95 oz., which is normal for a charger of that capacity. Models with other RTX GPUs use 170-watt chargers, which are somewhat smaller, and, if you do not need a discrete GPU, a model with integrated graphics is available. The model without a discrete GPU uses a substantially smaller, 135-watt charger. Model availability may vary, based on your region. The size of the charger is not a major issue for me, because I sometimes work on other laptops; I routinely carry a 230-watt charger as well as a USB-C charger in my laptop bag.

The vapor chamber greatly enhances the cooling, and is necessary for the more-powerful GPUs, but it does take up more space inside the case. The trade-off is that models having the vapor chamber do not have a second SSD slot, nor do they have a WWAN option.

Unboxing and first look

Figure 1: Top cover

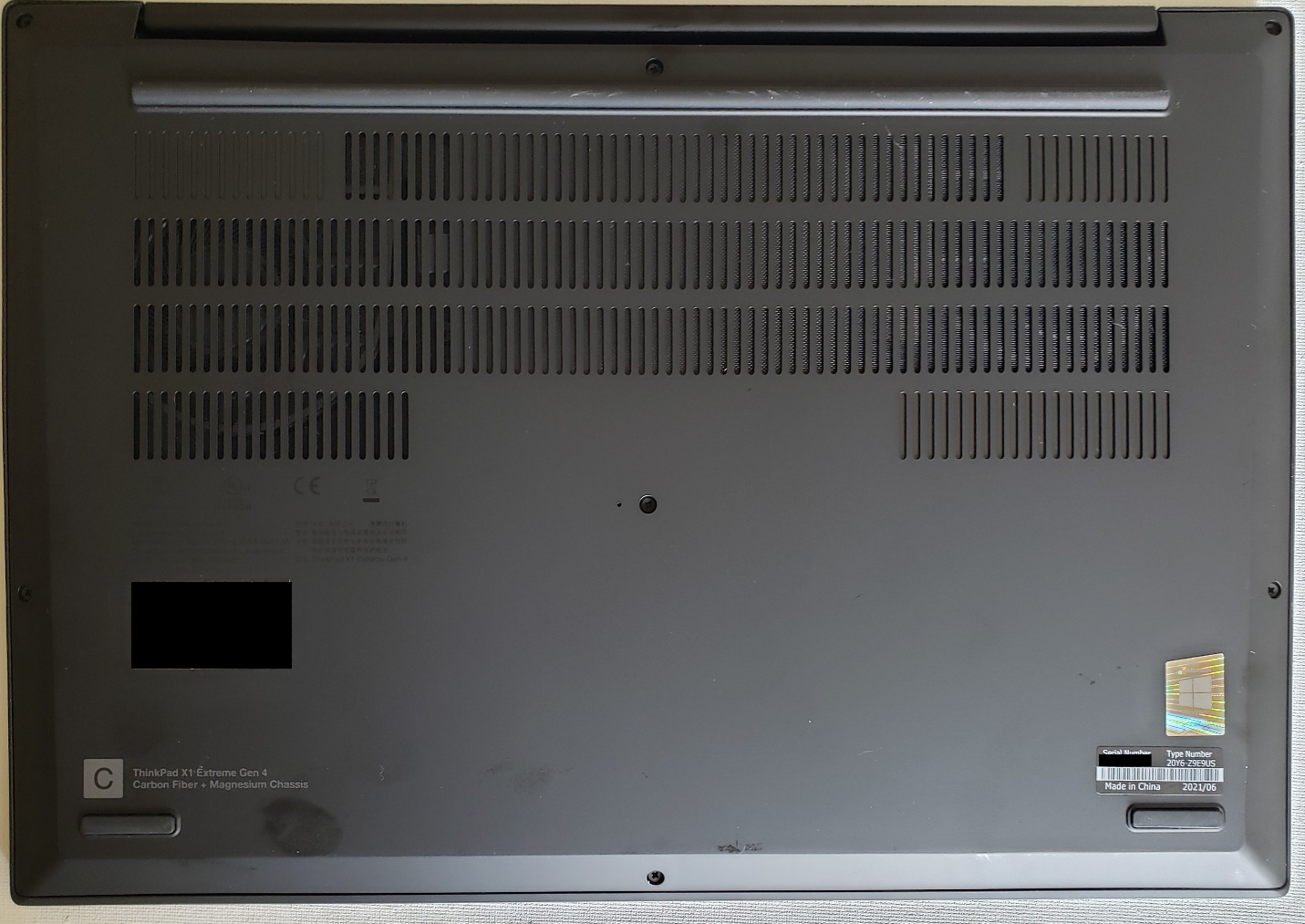

Figure 2: Bottom Cover

Figure 3: Left Side Ports

Figure 4: Right Side Ports

My first impressions were positive. After unpacking, before I turned it on, I inspected the machine for any shipping damage or visible defects and found none.

I set the machine up and plugged it in. I booted into the BIOS to set the date and time, and to confirm that the processor, memory and disk storage were correct. I then booted normally and connected the machine to my Lenovo ID and Microsoft ID. I installed Office 365 and tested those applications. As I expected, everything worked correctly; Office tends to run on any Windows machine. Being a business computer, the machine supports TPM 2.0 for data encryption and it also has a fingerprint reader integrated with the power button. I normally put passwords on through the BIOS, but I decided to wait. My test machine has a pre-release BIOS, and I will wait with passwords until there is a final version. I turned on “Windows Hello”. Using only the IR camera for Windows Hello, everything worked perfectly. I know that with Windows Professional and a TPM chip, Windows seems to be turning on BitLocker by default. That is not a feature I want or think I need, especially with an OPAL2.0 (hardware encrypted) drive and a disk password. I turned BitLocker off. (TPM availability may vary by region due to import and export restrictions on cryptography.)

I liked the keyboard. There was a clear, positive feedback when the key “clicked”. On many machines with a short key travel, I am never certain that a key stroke has registered. I also had no problem with “key bounce”, where the user tries to hit a key once and ends up with two (or more) of the same character. The area around the keyboard was slightly depressed, presumably to eliminate any problems with keyboard marks on the LCD. The keys never stick up above the level of the keyboard bezel.

The sound quality was excellent. There are large, user-facing Dolby ATMOS speakers on both sides of the keyboard. I tested the microphone and webcam on a video call. Everything worked fine. I could move around the room and my voice was still clearly heard.

Figure 5: Keyboard and Speakers

The machine looks like a traditional Lenovo ThinkPad.

Figure 6: X1 Extreme Running

The X1 Extreme uses DDR4 memory, which runs at 1.2V, and it supports a new standby power mode, available in the 7th generation and later Core processors. This power state needs far fewer refresh cycles in standby mode, thus lowering the power drain while the machine is sleeping. I ran a few tests simply to be sure that the various ports were working. I tried both the wireless and Ethernet connections, Bluetooth, the SD card reader, the HDMI port, all the USB ports and I charged the battery. Everything worked fine. Note: There is no Ethernet port, so I used a USB-C to Ethernet adapter (not included with my model). My model has a touch screen that supports an active pen. A Lenovo Pen Pro was included with the computer, and it worked perfectly. A short USB-C to USB-C cable was also included. I used the cable to charge the battery in the pen. Accessories may vary, based on model and geographic region. An Ethernet adapter, such as I used, may be included on some models, and can be ordered separately from Lenovo.

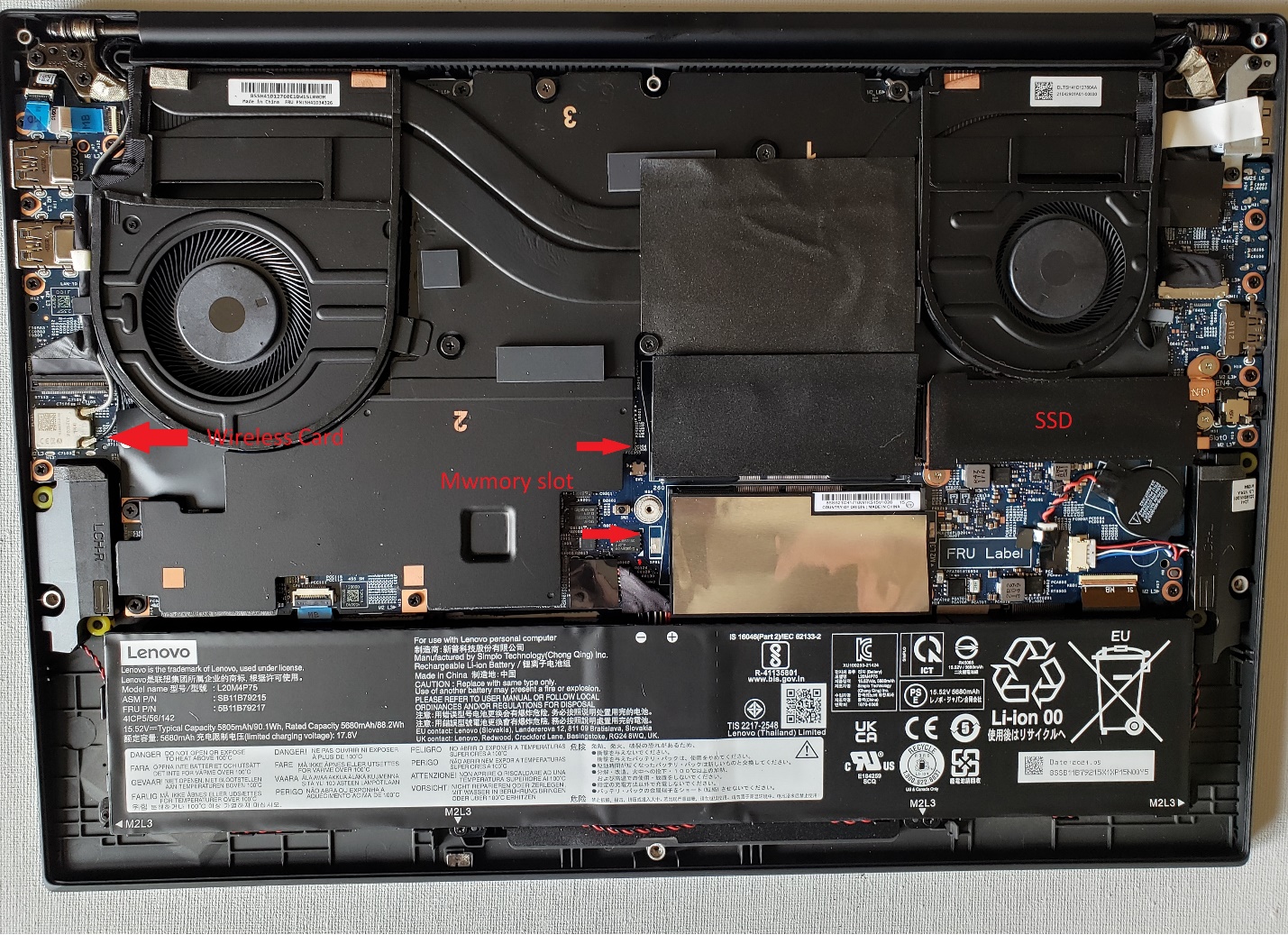

People frequently ask me about “upgradability”. In general, laptops are less upgradable than desktops, and compact laptops are often less upgradable than larger ones. As computers get smaller and thinner, memory, CPUs and sometimes even disk storage and wireless cards cannot be changed as they are integrated into or soldered onto the motherboard. In the ThinkPad X1 Extreme Generation 4, the CPU, GPU and wireless cards are soldered, but the memory and the SSD(s) are separate cards. The battery is inside the machine and can be logically disconnected for service, via the BIOS, without opening the case. I suggest that you would be better off not opening the machine unless you really need to do it. Having said that, the bottom cover is held on by 7 captive (they do not come out) screws. The lid lifts off from the hinge side. There are tiny tabs all around, and if you are not familiar with this sort of cover, it is easy to bend or break the tabs. Once the tabs are bent or broken, the cover will never fit correctly. I believe that you should buy a machine with a disk and memory big enough for your needs, rather than planning to upgrade later. If you really want to know how it looks inside, you can examine my picture. Inside, the memory slots and SSD are easily accessible. The SSD is shielded with a metal cover.

Figure 7: Inside view

There are two large cooling fans with a large vapor chamber and traditional heat pipes. I have read that the fans can draw air in through the keyboard, but I did not try to disassemble anything to confirm this fact. Models without a vapor chamber have a smaller fan and have a second SSD slot and an optional WWAN slot near the left fan.

Benchmark and stress testing

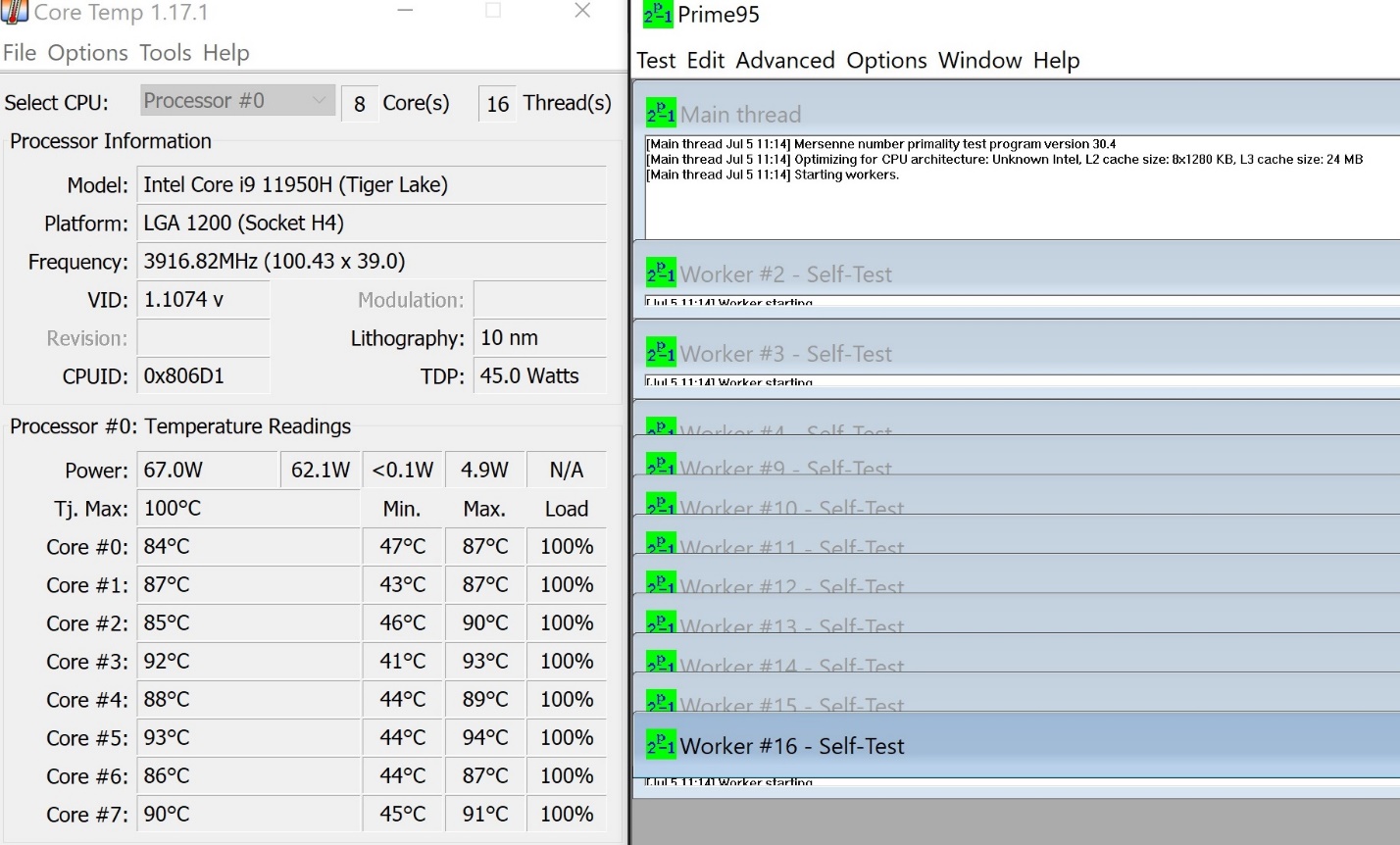

I always want to be sure that I will not run into a problem related to overheating or excessive fan noise. For testing, I often use CoreTemp to monitor temperatures. I was worried about temperatures because the X1 Extreme’s i9 processor has eight cores, draws 45 watts, and will run sixteen simultaneous threads. All this is packed into a thin case. I ran Prime95 to exercise the CPU as I monitored the temperatures. Prime95 is not a performance test, but rather a torture test to confirm CPU stability under extreme loads. The program starts long, demanding processes in all available CPU threads. Almost immediately, the temperature rose to 95˚C, which is hot, but not dangerously high. The fan sped up and, with 16 threads running, temperatures stayed between 83˚C and 93˚C. The fan noise was noticeable, but not horrible.

Stress testing

Figure 8: Prime95 Stress Test

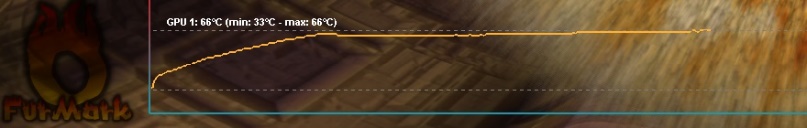

The X1 Extreme also has a very powerful graphics processor (NVIDIA GeForce RTX 3080) that can use 80 watts of power, which is more than the CPU draws. I have never used a GPU that powerful in any laptop, especially one in a thin case. I always use Furmark to determine frame rates and to compare multiple machines, but Furmark can also do a stress test and show GPU temperatures. I thought it prudent to include that kind of test.

Figure 9: Furmark Stress Test

The graph is displayed at the bottom of the Furmark screen while it is running. I ran for about 10 minutes and the GPU temperature rose to 66˚C, but no higher. The fan did run, as expected, but GPU temperatures seemed not to be a problem. The GPU temperature was lower than I had expected, presumably due to the efficiency of the vapor chamber.

Testing programs, like Prime95 and Furmark, stress a computer in ways that are not like normal tasks for most people. For someone who spends all day editing full-motion video or playing the most demanding games, a laptop can never rival the performance of a specialized desktop computer or workstation, but the X1 Extreme Gen 4 seems to be an excellent, portable substitute. For my use, the X1 Extreme seems perfect.

I always try to compare the performance of a machine I am reviewing with something similar, but in this case, I decided that a three-way comparison would be best. My reasons are that most people already own a computer. Those reading reviews, who are considering buying a new machine, will fall into two groups, those considering an upgrade of an older, similar model, and those comparing features of various new machines. With that in mind, I will compare the X1 Extreme with a two-year-old ThinkPad P1 which has a 9th generation, H-series, i7 CPU. My P1 also has the optional OLED screen, so I will see how the IPS screen in the X1 Extreme performs in comparison. I hope the P1 testing will help those considering upgrading a similar, but older, machine. I will also compare the X1 Extreme with a fast X1 Titanium Yoga, having an 11th generation i7-1180G7, a new, but low-power, processor. The X1 Titanium also uses integrated graphics. This second comparison will help other interested parties decide between models with low-power CPUs and the higher-power H-series processors. Also, it will show the difference between machines with integrated graphics and those with discrete GPUs. Before I started, I expected the X1 Extreme to be the fastest of the three machines. The 45-watt CPU should outperform the low-power CPU in the X1 Titanium Yoga and the P1 has a 9th generation Core i7. Across the entire line of CPUs, the 10 NM, 11th generation CPUs have yielded significant improvements, so I expect the same will apply in the comparison of the H-series processors in the X1 Extreme and the two-year-old P1.

There is one caveat to add. The X1 Extreme has pre-release versions of the BIOS and systems software. When final, customer-level software and firmware are released, testing scores may improve.

Performance testing

I like to use Novabench to test overall performance. It does a reasonable job and is free. I always try to use only free software when I am doing any reviews so readers may try to duplicate my tests. One problem with free tools is that they are less likely to work with very-recently released hardware. In this case, Novabench was not yet compatible with the mobile RTX 3080 GPU in the X1 Extreme. As a result, I used the option to skip the GPU tests on all three machines being compared. The graphics test will be done using Furmark.

Figure 10: Novabench

The CPU and disk scores on the X1 Extreme were, by far, the highest of the three, as expected. The three machines all had different amounts of memory. I have no knowledge of how that fact affects the assigned memory score. The transfer speed was highest on the X1 Extreme by nearly 50%, but the score on the P1 which had the most memory was slightly higher. I cannot explain it; you can draw your own conclusions.

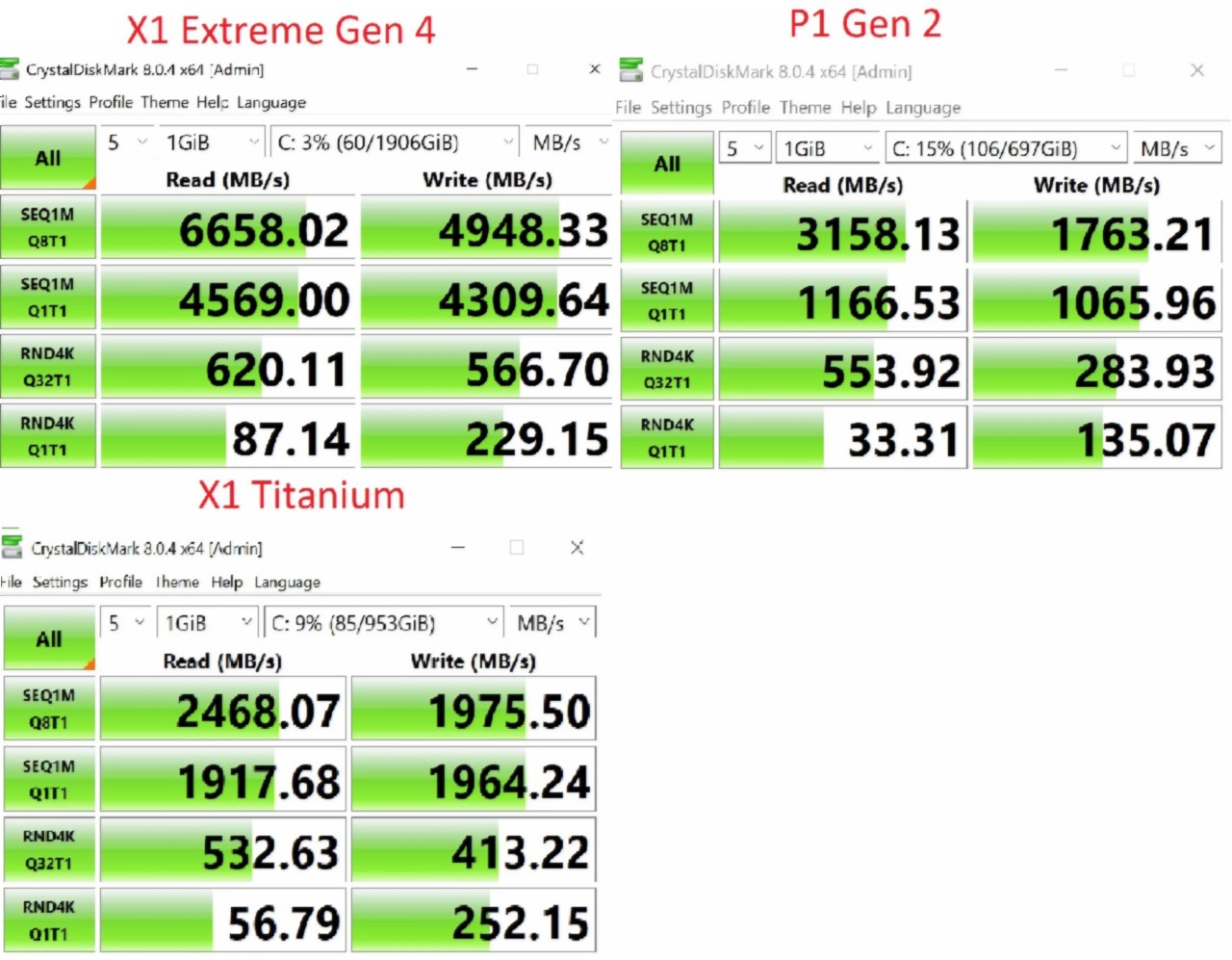

I assume the higher disk scores are primarily attributable to the fact that the X1 Extreme Gen 4 has a PCIe Gen 4 Performance SSD. In order to quantify the improvement and to try to see what types of accesses benefitted the most, I ran another test. This time, I used CrystalDiskMark, another freeware tool I like, which breaks out disk performance by types of tasks. CrystalDiskMark executes input (read) and output (write) operations of different types, times them and generates a score.

Figure 11: CrystalDiskMark

As you can see the PCIe Gen 4 Performance SSD was much faster than all the others, with the exception of one type of “write” operation where transfer speed was less important. In that case, the X1 Titanium was slightly faster. In the left column, there are tests shown as “seq” or sequential, or “rnd” or random, where smaller files that are not logically associated are read or written. Sequential reads would be used for things like loading a large picture; Random reads would be used for things like system start-up, where many small drivers are read and then loaded so that devices can be started. A heading like SEQ1M would mean sequentially reading or writing a 1-megabyte file. The next line might show Q8T1, meaning eight requests are queued up to be processed by one thread. Reading or writing is often more efficient if multiple requests can be queued up so that the SSD can process them at its own pace. Sometimes, multiple requests cannot be queued. An example might be some start-up operations where one small driver must be completely loaded before another one can be started. Windows keeps track of these dependencies. The faster transfer rate of the PCIe Gen 4 interface had the biggest impact on sequential operations. The PCIe gen 4 interface is simply twice as fast as generation 3. I do not know why the X1 Titanium was a little faster for the write operations labeled “RND 4K Q1T1” (Random access, 4 kilobyte blocks, one request queued, running in one thread). I would expect that sort of access might be used when unpacking a large zip file containing many small files.
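The test labels described above follow a regular pattern, so they can be decoded mechanically. The following Python sketch is only an illustration of that naming scheme as I have described it. The function name `parse_label` is my own, and I am assuming that "K" and "M" mean 1024 and 1024 × 1024 bytes, which I have not verified against the tool's documentation.

```python
import re

# Matches labels such as "SEQ1M Q8T1" or "RND 4K Q1T1".
_LABEL = re.compile(r"^(SEQ|RND)\s*(\d+)([KM])\s+Q(\d+)T(\d+)$", re.IGNORECASE)

# Assumption: binary units (1 K = 1024 bytes, 1 M = 1024 * 1024 bytes).
_UNIT_BYTES = {"K": 1024, "M": 1024 * 1024}


def parse_label(label):
    """Split a test label into access type, block size, queue depth, threads."""
    match = _LABEL.match(label.strip())
    if match is None:
        raise ValueError(f"unrecognized test label: {label!r}")
    pattern, size, unit, queue, threads = match.groups()
    return {
        "access": "sequential" if pattern.upper() == "SEQ" else "random",
        "block_bytes": int(size) * _UNIT_BYTES[unit.upper()],
        "queue_depth": int(queue),
        "threads": int(threads),
    }


print(parse_label("SEQ1M Q8T1"))
print(parse_label("RND 4K Q1T1"))
```

Reading a label this way makes it easier to see which rows stress raw transfer speed (large sequential blocks, deep queues) and which stress latency (small random blocks, one request at a time).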

There is a lot more information available on interpretation of CrystalDiskMark scores available on the internet. You may search for it if you are interested.

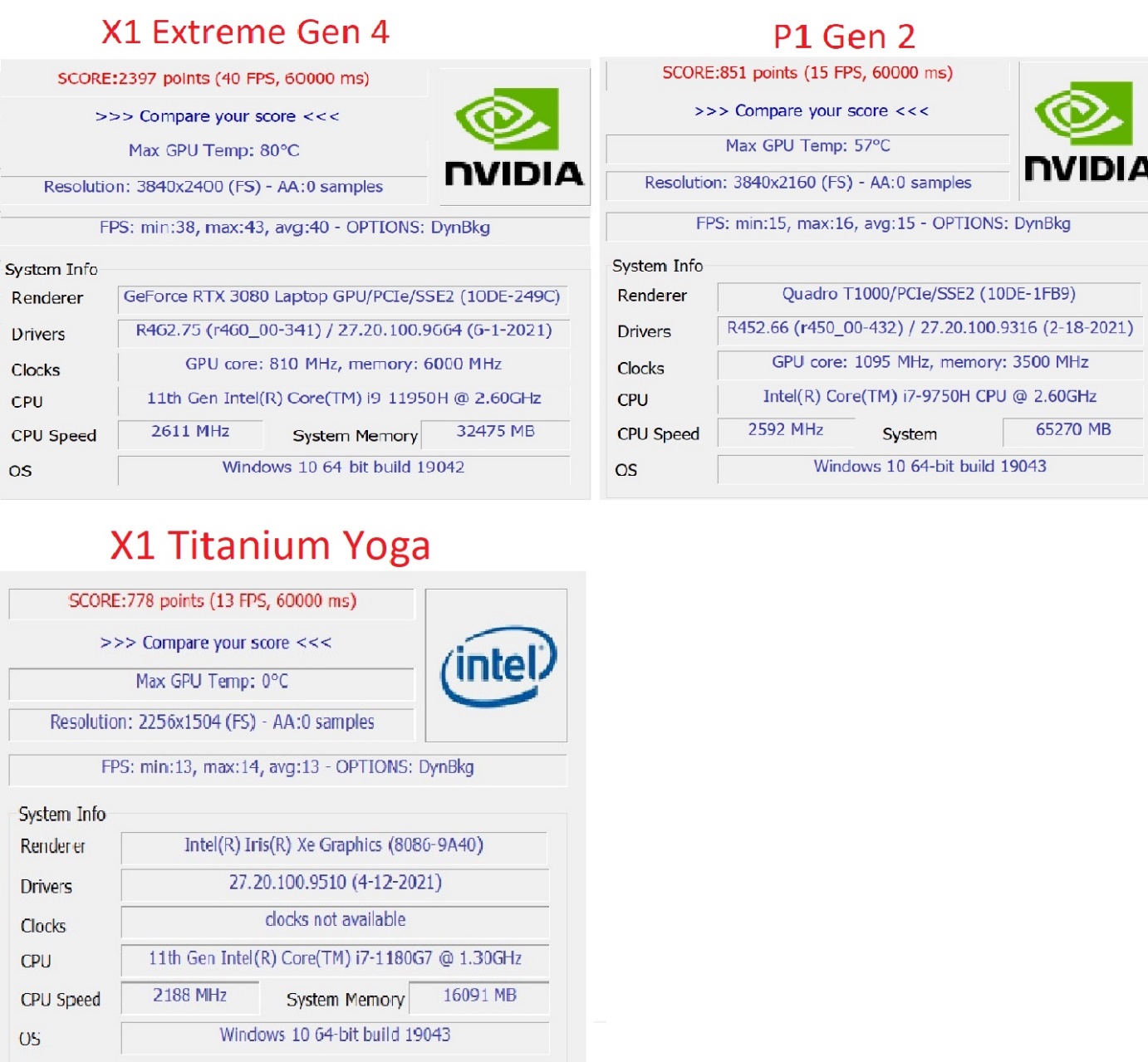

I used Furmark to test graphics performance. Furmark does a one-minute test with a complicated set of rotations and gyrations. It then generates a full-motion graphic. The three computers in the test had different screen resolutions, so there was no way to get a completely equal test. More pixels mean more work. To complicate matters, the X1 Extreme has a 16:10 aspect ratio while the P1 has a 16:9 screen. The X1 Titanium’s aspect ratio is 3:2. The X1 Extreme had the most pixels, 3840 X 2400. The P1 screen (16:9) is next with 3840 X 2160, and the X1 Titanium’s screen has 2256 X 1504. After some thought, I decided that running each machine using its full-screen native resolution was as good a way to test as any. Before starting the test, I expected the X1 Extreme, with its RTX 3080 GPU, would be the fastest and the X1 Titanium Yoga, running with integrated graphics and no discrete GPU, would be the slowest.
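To put "more pixels mean more work" into numbers, here is a small Python sketch that multiplies out the three native resolutions. It assumes, as a simplification of my own, that the work per frame scales roughly with the pixel count; the dictionary keys are informal labels.

```python
# Native resolutions (width, height) of the three machines in this test.
SCREENS = {
    "X1 Extreme Gen 4": (3840, 2400),
    "P1": (3840, 2160),
    "X1 Titanium Yoga": (2256, 1504),
}


def pixel_count(width, height):
    """Total number of pixels drawn for one full-screen frame."""
    return width * height


counts = {name: pixel_count(*size) for name, size in SCREENS.items()}
baseline = counts["P1"]

for name, count in counts.items():
    # Ratio relative to the P1, rounded for display only.
    print(f"{name}: {count:,} pixels, {count / baseline:.2f}x the P1")
```

By this rough measure the X1 Extreme draws about 11% more pixels per frame than the P1, while the X1 Titanium Yoga draws well under half as many.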

Figure 12: Furmark Graphics test

I was surprised by how much faster the X1 Extreme was. Its frame rate was nearly 2 ½ times that of the P1, even though it generated more pixels per frame.

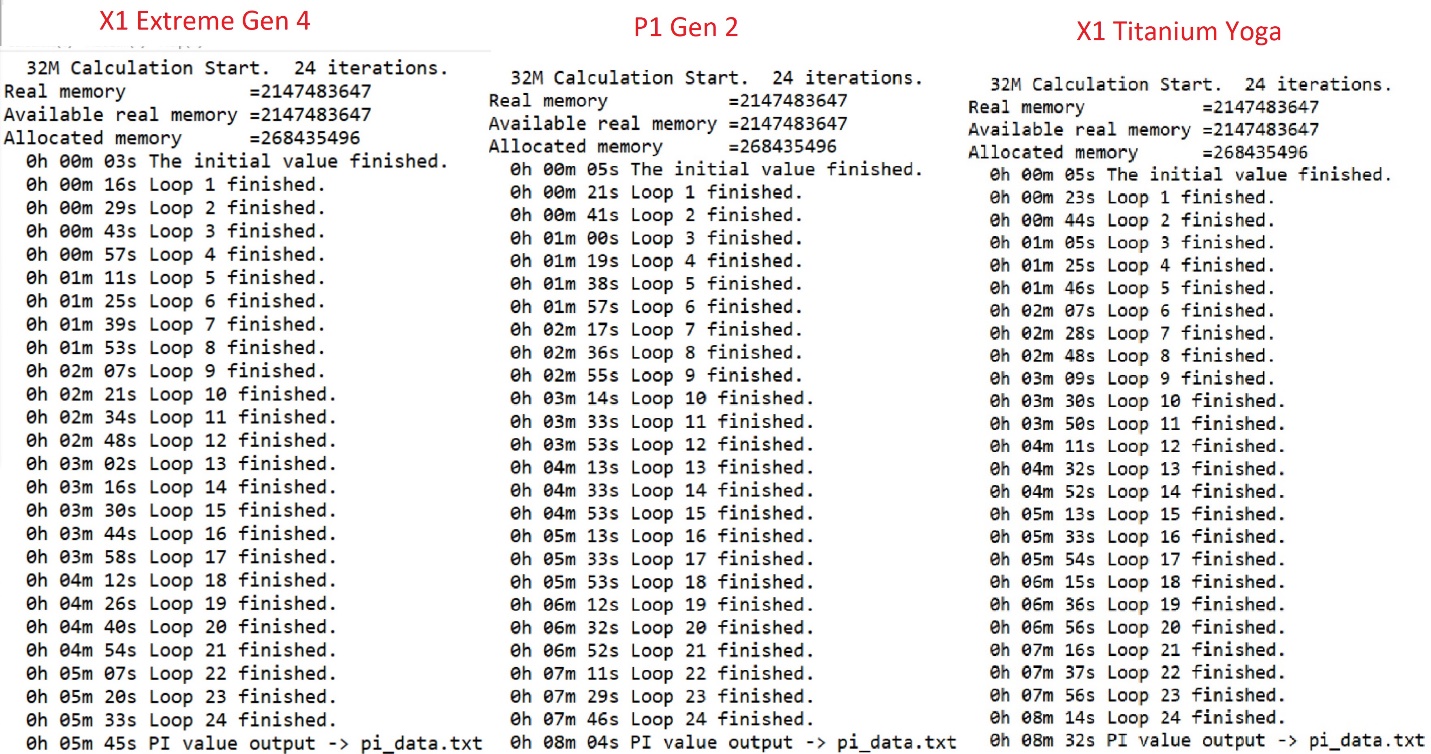

I also like to run a single-thread performance test to see how well a machine will perform when doing computations that cannot be spread out among multiple threads. Also, some older software programs (and some new programs) are not designed to use multiple threads. Often, CPUs with more cores have a lower base clock speed, so one would normally expect them to perform less well on a single-thread test. That expectation does not always hold. All three machines are new enough to employ Turbo Boost, a feature that lets a CPU run faster than its base clock speed when power and temperature allow. A single, demanding thread can “borrow” cycles from less-used threads to make single-threaded applications run faster than the clock speed would suggest. The program I use for single-thread CPU testing is SuperPi. This program forces the use of a single thread and calculates the first 32,000,000 digits of Pi. It goes through the process 24 times. One thing I look for is the change in elapsed times on the multiple iterations of the calculation. If an iteration takes longer, I attribute the change to throttling as the temperatures increase due to the heavy calculation. For your information, the X1 Extreme has a maximum Turbo speed of 5 GHz; the P1 has a maximum Turbo speed of 4.5 GHz, and the X1 Titanium Yoga has a maximum Turbo speed of 4.6 GHz.

Figure 13: Single Thread Performance

As expected, the X1 Extreme was the fastest. It finished the test in 5.75 minutes vs. 8.07 minutes for the P1. The X1 Titanium, with its low power CPU was the slowest, almost ½ minute slower than the P1. By the way, when I reviewed the X1 Titanium, at its introduction, it was (and still is) the fastest low-power machine I have ever used. The other machines simply use more electricity. Low-power laptops are fine for most tasks; computers with H-series CPUs are able to do types of things that had traditionally been limited to desktop computers. Most notable to me was the fact that on the X1 Extreme, the elapsed times for the various iterations did not increase after the temperatures had a chance to rise. The first cycle took about 13 seconds, and then they consistently took either 13 or 14 seconds, so there was no excessive throttling.

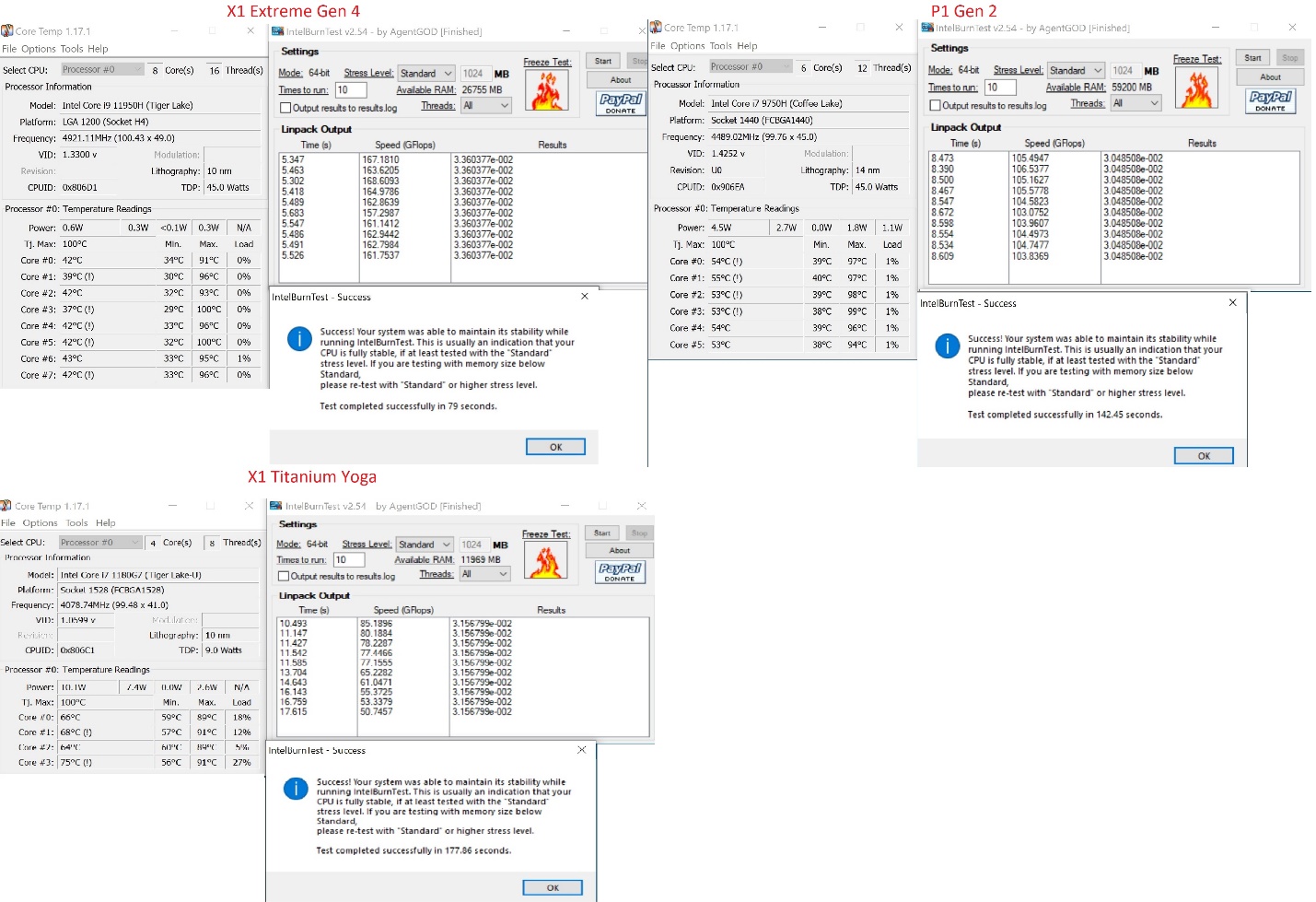

Earlier, I had run Prime95 to confirm that the X1 Extreme was able to survive a stress test in all threads and remain cool enough to be stable. I sometimes use a different program, Intel Burn Test, for the same purpose. Intel Burn Test goes through an extensive set of algorithms and calculates some number and compares the calculated number to an expected result. It then repeats the process 10 times and announces that, if your machine survived the test, it should be stable doing any real work. In addition, however, it indicates how long each of the iterations took. Less time would indicate a faster processor. If the times get longer as you go through the 10 cycles, it indicates that the CPU is being throttled down due to heat. With that in mind, I decided to run the program on the X1 Extreme to see if I could learn something. I used Core Temp to monitor temperatures. I also ran the test on the two other machines I had been testing.

Figure 14: Intel Burn Test

Looking at the results, the run times seemed to depend mostly on the number of cores of the CPU. The fastest, by quite a bit, was the X1 Extreme, which had 1/3 more cores than the P1 and double the number in the X1 Titanium.

Display discussion

I had said earlier that I would compare the OLED screen in the P1 with the high-resolution touchscreen in the X1 Extreme. First, I will say that I have always been attracted to OLED technology. My first experience was standing in front of an early example at a trade show several years back. I simply stood there and stared. I was not sure what I liked; it just seemed better than any screen I had ever seen. I now know that the difference is due to the fact that OLED screens can display black areas that are truly black.

Like many people I buy products at one of the warehouse clubs. The store’s entrance is located so that it is necessary to walk past the television sets, where they have different models arranged in a row, one of which always has an OLED screen. That set always looks better to me.

My screen comparison is subjective and depends on my observations and preferences. When I first tried the X1 Extreme, the black areas really looked black to me. That was the first non-OLED screen I had seen where I had that impression. Now that I have used my OLED P1 for a little more than a year, I know that there are some negatives. I know that OLED screens can be subject to “burn-in”, where, if something is displayed in the same location for an extended period, a faint image is “remembered”. Burn-in is not an issue for a television or a phone as screen content is constantly changing, but desktop icons are a problem on a computer. Another problem with currently produced OLED screens in a portable computer is that they seem to use more power than an LCD, lowering battery run time. I tried to quantify the power issue. My OLED-based P1 and the LCD-based X1 Extreme test unit both have high-resolution screens. They both have 45-watt processors. I tested using integrated graphics so that the higher-power GPU in the X1 Extreme would not be an issue. I do not have any tools to test battery life objectively, but I decided to do something simple. The X1 Extreme’s battery is new and my P1 has been mostly used while connected to AC. According to Lenovo Vantage, my P1’s battery holds a full charge, the same as a new battery. Before starting, I charged both batteries to 98% and set the brightness to 80% and the sound to 60%. I have an MP4 movie that I played on both machines; the run time is 1:47. I played the whole movie while running both machines on battery. At the end, the charge percentage remaining was 70% on the X1 Extreme and 52% on the P1. I should add that part of the difference can be attributed to the fact that the X1 Extreme has a 90 watt-hour battery, while the P1 Gen2 has an 80 watt-hour battery.
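Because the two batteries have different capacities, the percentages are easier to compare once they are converted to watt-hours. The Python sketch below does that conversion. It rests on assumptions of my own: that both batteries really hold their rated capacity, that the reported percentage tracks stored energy linearly, and that the 1:47 run time means 1 hour 47 minutes.

```python
def energy_used_wh(capacity_wh, start_pct, end_pct):
    """Energy drawn from the battery, assuming percent maps linearly to Wh."""
    return capacity_wh * (start_pct - end_pct) / 100.0


def average_draw_w(energy_wh, minutes):
    """Average power over the run, in watts."""
    return energy_wh / (minutes / 60.0)


RUNTIME_MIN = 107  # assumption: 1:47 read as 1 hour 47 minutes

x1_wh = energy_used_wh(90, 98, 70)  # X1 Extreme: 90 Wh battery, 98% -> 70%
p1_wh = energy_used_wh(80, 98, 52)  # P1 Gen 2: 80 Wh battery, 98% -> 52%

print(f"X1 Extreme: {x1_wh:.1f} Wh, {average_draw_w(x1_wh, RUNTIME_MIN):.1f} W average")
print(f"P1:         {p1_wh:.1f} Wh, {average_draw_w(p1_wh, RUNTIME_MIN):.1f} W average")
```

Under those assumptions the X1 Extreme used roughly 25 Wh and the P1 roughly 37 Wh for the same movie, so the P1 still drew noticeably more energy even after battery size is taken out of the comparison. This is a rough estimate, not a controlled measurement.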

I also stood the two computers next to each other, the way televisions are arranged at the warehouse club. The black on the OLED screen was still “purer”, but, from my subjective point of view, the two were very close. When I looked at the two laptops separately, I did not notice a difference. I think that if I were buying a laptop and both screens were available, considering all the factors, I would choose the screen in the X1 Extreme. OLED may be a better choice for a television or a phone, or in a specialty device, like one with a foldable or curved screen, but it probably is not the best option for a “normal” computer at this time. I do believe that if you examine the way an OLED screen generates an image, that technology or something like it will eventually be the way all computer screens are made, much the same way that LED backlights replaced CCFL backlights or that LCD screens replaced CRTs. I think the time for the transition is not here yet. I wrote a simplified comparison of the two technologies and how they work at the end of a review I wrote for the X1 Fold. If you are interested, you can find it here:

Final analysis

There is always something that I would like to see changed in the next model. In this case, I had a really hard time coming up with something to suggest. I think the screen is great. The machine is fast; it has all the ports I would normally use. Memory is ample and could be expanded. My P1 has two SSD slots and I sometimes use that fact to initialize or test a drive. I realize that space is at a premium and that an efficient cooling system is necessary to support a powerful GPU, but I wish there were a second slot, as there is in models with lesser GPUs. Actually, this is not a major problem, as I can use a USB-attached adapter. Perhaps there could be enough room to allow models with a single SSD slot to use a double-sided SSD. That would give the single-slot models the same 4 TB capacity currently available in the two-slot models. The double-sided idea would not help with my spare slot problem, but it would help users who needed a huge amount of SSD storage.

I think the ThinkPad X1 Extreme Generation 4 would be an excellent choice for anyone needing a thin and lightweight, but high-powered, traditional business laptop.

The following section on screen shape can be skipped if you wish. I wrote much of it earlier this year for a review of another laptop. I have added some additional material regarding the change between generation three and generation four of the X1 Extreme. I will say, however, that my knowledge of this subject is only rudimentary and nothing here is highly technical. I suggest that you at least look at the images where I compare the different shapes.

The aspect ratio of a screen is the relationship between the width and the height of a screen’s viewing area. Early computers used the same sort of CRT displays that were used in televisions. Their screens were only slightly wider than they were tall, so they look tall next to modern wide screens. A common resolution was 640 X 480, meaning that there were 640 columns and 480 rows of dots. The ratio of the number of columns to rows was 4:3. At that time, televisions had the same 4:3 proportions. About 10 years ago, computer screens were changed to be much wider than they were tall, having an aspect ratio of 16:10 (the ratio of width to height). The older, taller screens are commonly referred to as having a 4:3 aspect ratio, which is already width vs. height; where I label them 3:4 in my comparisons, I mean the same shape.

About 2 years after the wide laptop screens became standard, some television programming began using High Definition (HD) format (1280 X 720), which has a 16:9 aspect ratio. At that time, laptop screens were mostly changed from 16:10 to 16:9. The shorter, wider screens were great for watching made-for-television videos, but they were awkward for people doing a lot of word processing or computer programming, where having more lines of text visible at one time was an advantage.

Movies made to be shown in theatres are commonly photographed in an ultrawide format, having a 21:9 aspect ratio. There are also some computer screens using this format. These devices are commonly used by people who work with ultrawide video and by some gamers.

This discussion is relevant because the X1 Extreme Gen 4 has a screen resolution of 3840 X 2400, which has the proportion of 16:10. Last year’s Gen 3 model had a 16:9 screen.

I produced an image to show the various shapes superimposed, overlaying each other for comparison. Screens come in various sizes, but I picked one arbitrarily and measured the surface area. I then calculated the dimensions for screens of different shapes but having the same viewing space surface area using simple algebra.
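The "simple algebra" is short enough to show. For a fixed area A and an aspect ratio r (width divided by height), the height is the square root of A / r and the width is r times the height. The Python sketch below applies that to several shapes. The area of 100 square inches is an arbitrary value chosen for illustration, not the one I measured, and the function name is my own.

```python
import math


def dims_for_area(area, ratio_w, ratio_h):
    """Width and height of a rectangle with the given area and aspect ratio."""
    r = ratio_w / ratio_h
    height = math.sqrt(area / r)
    width = r * height
    return width, height


AREA_SQ_IN = 100.0  # arbitrary illustrative area, not a measured screen

for ratio in [(4, 3), (3, 2), (16, 10), (16, 9), (21, 9)]:
    width, height = dims_for_area(AREA_SQ_IN, *ratio)
    print(f"{ratio[0]}:{ratio[1]}  {width:.2f} in wide x {height:.2f} in tall")
```

With the area held constant, each step toward a wider ratio trades height for width, which is the effect the superimposed shapes are meant to show.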

Figure 15: Aspect ratios

I included the old-style 3:4 screen and the uncommon, ultra-wide, 21:9, screens for completeness. It seems obvious that a taller screen can display more lines of text than a shorter one. Actually, there are many more factors. Some people simply like larger text. Some people face ocular challenges and older people often need larger text. Beyond personal differences, there are relevant screen differences. Higher resolution screens are sharper, so many people will choose smaller text. Also, a brighter screen may be easier to read. While a 400-nit screen, like the one on my P1 Generation two, is considered very bright, the X1 Extreme model I tested has a 600-nit screen. A nit is defined as 1 candela per square meter (cd/m2). That definition is useless to me except for the fact that “more nits” means brighter. It is usually easier to read smaller text if it is brighter.

The generation four X1 Extreme has a 16” screen with a resolution of 3840 X 2400. The generation three model and my P1 used in the review have a 15.6” screen with a resolution of 3840 X 2160. The width in pixels is the same, so the new, taller model has a larger surface area. The physical size of the machine is almost identical. The additional pixels in the vertical direction fit because the X1 Extreme’s bezel is narrower at the bottom. I am including another graphic to show the relative sizes.
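The relative sizes can also be estimated from the diagonal and the aspect ratio alone, using the Pythagorean theorem. The Python sketch below is a rough calculation that assumes the nominal 16” and 15.6” diagonals are exact and that the panels match 16:10 and 16:9 exactly; real panels may differ slightly. The function name is my own.

```python
import math


def dims_from_diagonal(diagonal, ratio_w, ratio_h):
    """Width and height of a screen from its diagonal and aspect ratio."""
    unit = diagonal / math.hypot(ratio_w, ratio_h)
    return ratio_w * unit, ratio_h * unit


gen4_w, gen4_h = dims_from_diagonal(16.0, 16, 10)  # X1 Extreme Gen 4
gen3_w, gen3_h = dims_from_diagonal(15.6, 16, 9)   # Gen 3 and my P1

print(f"16.0 in, 16:10: {gen4_w:.2f} x {gen4_h:.2f} in, area {gen4_w * gen4_h:.1f} sq in")
print(f"15.6 in, 16:9:  {gen3_w:.2f} x {gen3_h:.2f} in, area {gen3_w * gen3_h:.1f} sq in")
```

Under these assumptions the two panels come out almost exactly the same width, about 13.6 inches, while the new one is roughly 0.8 inch taller. That is consistent with the extra rows of pixels fitting into space that used to be bezel.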

Figure 16: Relative screen sizes